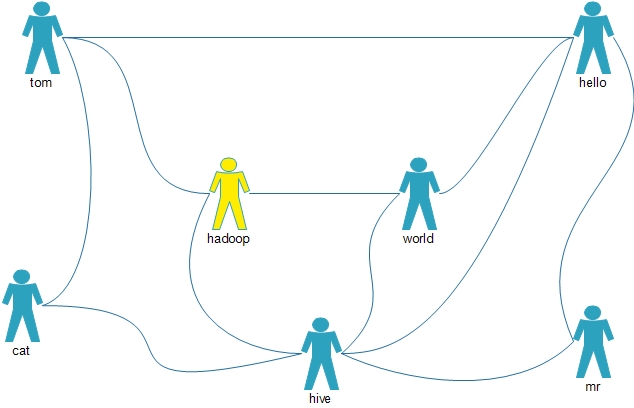

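In the figure, a direct line between two users means they are friends.

The goal is to recommend friends for hadoop based on this graph.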

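The algorithm works as follows:

1. Collect all candidate second-degree relationships.

Every pairwise combination of the friends in one user's friend list is a candidate second-degree relationship.

A second-degree relationship means two users who are not friends but can be connected through exactly two edges.

For example, hadoop and hello are in a second-degree relationship.

2. From the pairs produced in the previous step, filter out those that are already direct friends.

What remains are the true second-degree relationships.

3. Count how many times each remaining pair occurs; this count is the pair's intimacy score.

For example, the intimacy score of hadoop and hello is 3:

starting from hadoop, there are three different two-step paths to hello (via tom, hive, and world).

4. Sort by intimacy score in descending order to get the recommendation list.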
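The four steps can be tried out in memory before moving to MapReduce. The following is a minimal plain-Java sketch, not the MapReduce implementation itself; the class name `FofSketch`, its helper methods, and the `a:b` pair-key format are assumptions made for illustration. It uses the sample data from this article.

```java
import java.util.*;

class FofSketch {
    // Sample friend lists from this article: key = user, value = that user's friends.
    static Map<String, List<String>> sampleGraph() {
        Map<String, List<String>> g = new LinkedHashMap<>();
        g.put("tom", Arrays.asList("hello", "hadoop", "cat"));
        g.put("world", Arrays.asList("hadoop", "hello", "hive"));
        g.put("cat", Arrays.asList("tom", "hive"));
        g.put("mr", Arrays.asList("hive", "hello"));
        g.put("hive", Arrays.asList("cat", "hadoop", "world", "hello", "mr"));
        g.put("hadoop", Arrays.asList("tom", "hive", "world"));
        g.put("hello", Arrays.asList("tom", "world", "hive", "mr"));
        return g;
    }

    // Order-independent key, so (a, b) and (b, a) count as the same pair.
    // Assumes user names never contain ':'.
    static String pairKey(String a, String b) {
        return a.compareTo(b) < 0 ? a + ":" + b : b + ":" + a;
    }

    // Steps 1 to 3: candidate pairs, minus direct friends, with occurrence counts.
    static Map<String, Integer> intimacy(Map<String, List<String>> graph) {
        Set<String> direct = new HashSet<>();
        Map<String, Integer> counts = new HashMap<>();
        for (Map.Entry<String, List<String>> e : graph.entrySet()) {
            List<String> friends = e.getValue();
            for (String f : friends) {
                direct.add(pairKey(e.getKey(), f)); // remember direct friendships
            }
            // Step 1: every pair of friends of the same user is a candidate.
            for (int i = 0; i < friends.size(); i++) {
                for (int j = i + 1; j < friends.size(); j++) {
                    counts.merge(pairKey(friends.get(i), friends.get(j)), 1, Integer::sum);
                }
            }
        }
        // Step 2: drop pairs that are already direct friends.
        counts.keySet().removeAll(direct);
        // Step 3: the remaining counts are the intimacy scores.
        return counts;
    }

    // Step 4: recommendations for one user, highest score first (ties broken by name).
    static Map<String, Integer> recommend(String user, Map<String, Integer> counts) {
        List<Map.Entry<String, Integer>> hits = new ArrayList<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            String[] p = e.getKey().split(":");
            if (p[0].equals(user)) {
                hits.add(new AbstractMap.SimpleEntry<>(p[1], e.getValue()));
            } else if (p[1].equals(user)) {
                hits.add(new AbstractMap.SimpleEntry<>(p[0], e.getValue()));
            }
        }
        hits.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        Map<String, Integer> result = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> h : hits) {
            result.put(h.getKey(), h.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {hello=3, cat=2, mr=1}
        System.out.println(recommend("hadoop", intimacy(sampleGraph())));
    }
}
```

Using an order-independent pair key means each unordered pair is counted once per common friend, which matches the "three two-step paths" reading of the hadoop and hello example.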

Implementation (main code only):

Data

tom hello hadoop cat

world hadoop hello hive

cat tom hive

mr hive hello

hive cat hadoop world hello mr

hadoop tom hive world

hello tom world hive mr

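Each line starts with a user, followed by that user's friends. The first line, for example, means that tom is friends with hello, hadoop, and cat.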
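To make the input format concrete, here is a small sketch of how one line could be split into a user and a friend list. It assumes the fields are separated by whitespace, as they appear above; the real input file may use a different delimiter such as a tab. The class and method names (`FriendLineParser`, `parseLine`, `parseAll`) are hypothetical.

```java
import java.util.*;

class FriendLineParser {
    // Splits one input line: first token is the user, the rest are that user's friends.
    // Assumes whitespace-separated fields.
    static Map.Entry<String, List<String>> parseLine(String line) {
        String[] tokens = line.trim().split("\\s+");
        List<String> friends = new ArrayList<>(Arrays.asList(tokens).subList(1, tokens.length));
        return new AbstractMap.SimpleEntry<>(tokens[0], friends);
    }

    // Parses all lines into a user -> friends map, skipping blank lines.
    static Map<String, List<String>> parseAll(List<String> lines) {
        Map<String, List<String>> graph = new LinkedHashMap<>();
        for (String line : lines) {
            if (line.trim().isEmpty()) {
                continue;
            }
            Map.Entry<String, List<String>> e = parseLine(line);
            graph.put(e.getKey(), e.getValue());
        }
        return graph;
    }

    public static void main(String[] args) {
        Map.Entry<String, List<String>> e = parseLine("tom hello hadoop cat");
        // prints tom -> [hello, hadoop, cat]
        System.out.println(e.getKey() + " -> " + e.getValue());
    }
}
```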

* The first mapper produces all candidate second-degree relationships